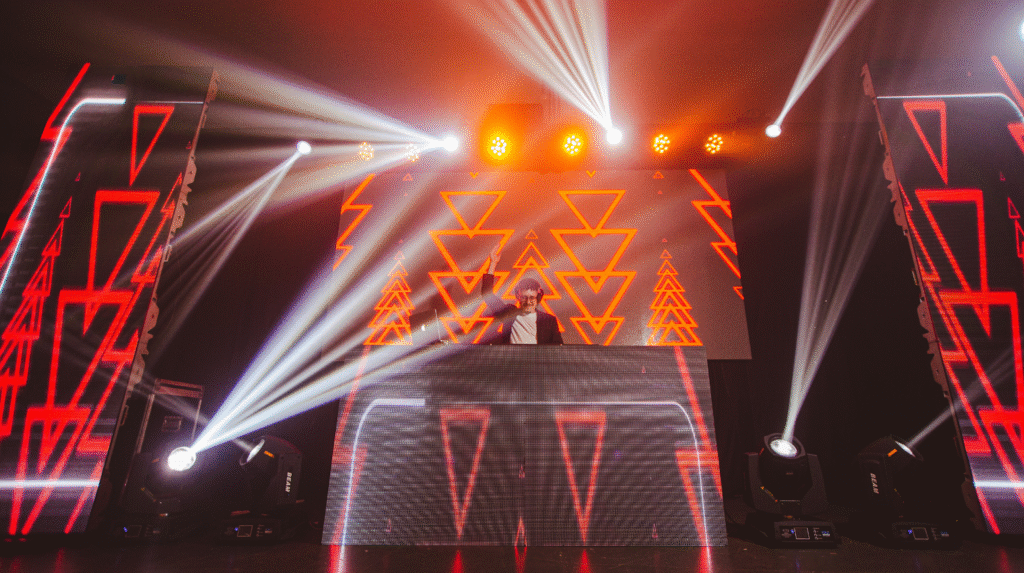

Walk into any electronic music show these days, and the visual spectacle hits almost as hard as the bass. Gone are the days when concert visuals were little more than looping video clips and unsophisticated lighting rigs. Over the past several years, a real transformation has taken root; live visuals and lights don’t just play along in the background anymore.

With high-powered software at their disposal, artists and venues shape entire multi-sensory worlds in real time, weaving together sight, sound, and the energy of the crowd itself. Festival stats back it up: as of last year, around 85% of big European electronic shows used dynamic, real-time visuals, up from fewer than half in 2017.

Real-time response and participation

Advances in live visual systems increasingly focus on real-time responsiveness to both the music and the audience in the room.

Software tools such as TouchDesigner and HeavyM analyze incoming audio, including tempo changes and frequency content, and map those features onto visual parameters. Screens and projections then update in step with the music throughout a performance.
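To make the idea concrete, here is a minimal sketch of audio-to-visual mapping in plain Python. It is not how TouchDesigner or HeavyM work internally, and none of the names below come from either tool: `VisualParams`, `frame_to_visuals`, and the 60 Hz "bass" target are hypothetical choices for illustration. It uses the Goertzel algorithm to measure energy at one low frequency and RMS to measure overall loudness, then lets the bass scale a shape and the loudness set its brightness.

```python
import math
from dataclasses import dataclass

# Hypothetical visual parameters driven by one frame of audio.
@dataclass
class VisualParams:
    scale: float       # 1.0 = resting size, up to 2.0 on a full-scale bass tone
    brightness: float  # 0.0 (dark) to 1.0 (full)

FULL_SCALE_SINE_RMS = 1 / math.sqrt(2)  # RMS of a sine with amplitude 1.0

def goertzel_power(samples, sample_rate, freq):
    """Squared DFT magnitude of the bin nearest `freq` (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * freq / sample_rate)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2

def rms(samples):
    """Root-mean-square level of the frame (overall loudness)."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def frame_to_visuals(samples, sample_rate, bass_hz=60.0):
    n = len(samples)
    # Divide by (n/2)^2 so a full-scale sine exactly on the bass bin reads as 1.0
    bass = goertzel_power(samples, sample_rate, bass_hz) / (n / 2) ** 2
    loudness = rms(samples) / FULL_SCALE_SINE_RMS
    return VisualParams(scale=1.0 + min(bass, 1.0),
                        brightness=min(loudness, 1.0))

if __name__ == "__main__":
    sr = 48_000
    # 800 samples at 48 kHz hold exactly one cycle of a 60 Hz tone
    bass_tone = [math.sin(2 * math.pi * 60 * i / sr) for i in range(800)]
    print(frame_to_visuals(bass_tone, sr))  # scale close to 2.0, brightness 1.0
```

In a real show this would run once per audio buffer, and the raw values would usually be smoothed over several frames so the visuals pulse rather than flicker.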

Big-name acts like Deadmau5 have upped the ante with setups such as CUBE V3. This beast of a stage piece doesn’t just sit there looking cool; it shifts and morphs based on the mood and narrative arc of the show.

Some newer festivals and performances go further and invite the crowd in, letting people tap or swipe on their phones or wave their hands to influence what happens onstage. According to a recent study in Frontiers in Virtual Reality, most festival-goers at major events felt they were genuinely part of the visual action, a far cry from the days when visuals were just passive wallpaper.
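One simple way to turn thousands of phones into a single stage control is to count recent taps in a sliding time window and normalize the count. The sketch below assumes tap timestamps (in seconds) arrive in time order from some hypothetical phone or web-app backend; `CrowdIntensity`, `window_s`, and `taps_for_max` are illustrative names, not part of any festival platform.

```python
from collections import deque

class CrowdIntensity:
    """Converts a stream of audience tap timestamps into a 0..1 control value."""

    def __init__(self, window_s=2.0, taps_for_max=500):
        self.window_s = window_s          # how far back taps still count, in seconds
        self.taps_for_max = taps_for_max  # tap count that maps to full intensity
        self._taps = deque()              # timestamps, oldest first

    def register_tap(self, t):
        # Assumes timestamps arrive in non-decreasing order
        self._taps.append(t)

    def value(self, now):
        # Discard taps that have fallen out of the sliding window
        cutoff = now - self.window_s
        while self._taps and self._taps[0] < cutoff:
            self._taps.popleft()
        return min(len(self._taps) / self.taps_for_max, 1.0)
```

The returned value could drive, say, particle density or brightness. A longer window smooths out bursts of tapping at the cost of a slower response.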

Multi-media immersion on stage

Step backstage and it’s a tangle of tech: projection mapping, shifting LEDs, kinetic lights, surround sound. With software like HeavyM, designers can now project shapes and images onto irregular architectural surfaces, turning each venue into a living, responsive part of the show.
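At its simplest, fitting an image to a surface means warping a flat rectangle onto the four corners of that surface as seen from the projector. The sketch below does this with bilinear interpolation, a basic "corner pin". It is a swapped-in simplification, not HeavyM's method; perspective-correct tools typically use a homography instead. The function names and corner coordinates are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def corner_pin(u: float, v: float, tl: Point, tr: Point, br: Point, bl: Point) -> Point:
    """Map (u, v) in the unit square onto the quad tl-tr-br-bl by bilinear interpolation."""
    # Interpolate along the top and bottom edges, then blend between them
    top = (tl[0] + (tr[0] - tl[0]) * u, tl[1] + (tr[1] - tl[1]) * u)
    bottom = (bl[0] + (br[0] - bl[0]) * u, bl[1] + (br[1] - bl[1]) * u)
    return (top[0] + (bottom[0] - top[0]) * v, top[1] + (bottom[1] - top[1]) * v)

def warp_grid(cols: int, rows: int,
              quad: Tuple[Point, Point, Point, Point]) -> List[List[Point]]:
    """Projector-space positions for a (rows+1) x (cols+1) mesh of texture points."""
    return [[corner_pin(c / cols, r / rows, *quad) for c in range(cols + 1)]
            for r in range(rows + 1)]

if __name__ == "__main__":
    # Hypothetical corners (in projector pixels) of a slanted wall panel: tl, tr, br, bl
    wall = ((10, 20), (300, 35), (290, 210), (5, 200))
    for row in warp_grid(2, 2, wall):
        print(row)
```

A renderer would draw the image as a textured mesh through these points; a finer grid follows curved or uneven surfaces more closely once individual points are nudged by hand.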

VR and mixed reality aren’t just gimmicks; they’re increasingly showing up at large festivals. Alongside them, productions like Tomorrowland and artists such as Eric Prydz are building immersive environments out of LED walls, light tunnels, and 3D sound.

Spatial audio takes things a step further: paired tightly with the visuals, it lets the crowd practically feel the music moving around them. In some productions, VR headsets track where fans look and let that gaze shape the visuals in real time, folding their attention into the event itself.

According to recent work from NIME (the International Conference on New Interfaces for Musical Expression), shows are becoming social, multi-sensory ecosystems where thousands can plug into the same pulse, each in their own way. Visuals aren’t only there for atmosphere; they’re what ties the sensory layers together.

Emotional mapping and cross-modal design

Some shows now set out to map emotion. Software engines don’t just react to beats; they read the emotional flow of a set and assign visuals by mood. A dreamy section? Maybe a haze of warm colors or soft shapes. Building tension? The screens fracture, hues turn colder, and lines stutter in sync with the rising sound. With AI and live mapping on the job, visuals can echo a song’s heartbreak or euphoria in real time.
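How a mood engine might turn its estimate into visuals can be sketched with a single "tension" score between 0 (dreamy) and 1 (building tension), following the examples above: warm hues and soft shapes at one end, colder hues, harder edges, and faster stutter at the other. The tension score is assumed to come from some upstream analysis or AI model that is not shown, and the hue endpoints and 8 Hz stutter ceiling are arbitrary illustrative values.

```python
import colorsys
from dataclasses import dataclass

@dataclass
class MoodVisuals:
    rgb: tuple            # (r, g, b), each 0.0..1.0
    edge_softness: float  # 1.0 = soft, hazy shapes; 0.0 = hard, fractured edges
    stutter_hz: float     # how often lines flicker; 0 means steady

WARM_HUE = 30 / 360    # orange
COLD_HUE = 210 / 360   # blue
MAX_STUTTER_HZ = 8.0   # arbitrary ceiling for illustration

def mood_to_visuals(tension: float) -> MoodVisuals:
    t = max(0.0, min(1.0, tension))             # clamp the (assumed) 0..1 score
    hue = WARM_HUE + (COLD_HUE - WARM_HUE) * t  # slide from warm to cold
    saturation = 0.6 + 0.4 * t                  # more saturated as tension rises
    rgb = colorsys.hsv_to_rgb(hue, saturation, 1.0)
    return MoodVisuals(rgb=rgb, edge_softness=1.0 - t,
                       stutter_hz=MAX_STUTTER_HZ * t)

if __name__ == "__main__":
    for label, score in [("dreamy", 0.0), ("building", 0.5), ("peak tension", 1.0)]:
        print(label, mood_to_visuals(score))
```

Collapsing mood to one number keeps the mapping easy to reason about; a richer engine might use two axes (for example, positive versus negative feeling and calm versus energetic) and map each to different visual properties.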

Experiments in Europe suggest this can make audiences feel more connected and more emotionally engaged: roughly three-quarters of festival-goers reported a stronger pull toward performances whose visuals tracked mood, not just rhythm.

Tools and future directions

Nowadays, nearly every live visual show hinges on flexible, modular tech. TouchDesigner sits at the heart of many massive events. HeavyM’s real-time tricks let even smaller acts coat walls in music-reactive animation. VR and MR are steadily making inroads, especially in North America.

Programmable LED panels, long the backbone of stage visuals, are now being combined with projection, lasers, and audience-participation tools. More artists are also skipping the pause between songs, and software lets the visuals carry one continuous story across transitions without missing a beat.
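One common way to keep visuals flowing between tracks is to crossfade the numeric parameters of the outgoing scene into the incoming one, timed in beats rather than seconds so the change lands on the music. The sketch below rests on assumptions: a scene is modeled as a hypothetical dict of numeric parameters with matching keys, the current beat position comes from a tempo tracker that is not shown, and smoothstep easing is just one choice among many.

```python
def smoothstep(x: float) -> float:
    """Ease-in/ease-out curve on 0..1 (input is clamped)."""
    x = max(0.0, min(1.0, x))
    return x * x * (3.0 - 2.0 * x)

def transition_progress(beat: float, start_beat: float, length_beats: float) -> float:
    """Fraction of a beat-synced transition completed, clamped to 0..1."""
    return max(0.0, min(1.0, (beat - start_beat) / length_beats))

def blend_scenes(scene_a: dict, scene_b: dict, progress: float) -> dict:
    """Weighted mix of two scenes' parameters: progress 0 gives A, 1 gives B."""
    w = smoothstep(progress)
    return {key: scene_a[key] * (1.0 - w) + scene_b[key] * w for key in scene_a}

if __name__ == "__main__":
    # Hypothetical scenes; an 8-beat transition starting at beat 64
    outgoing = {"hue": 0.0, "speed": 1.0}
    incoming = {"hue": 10.0, "speed": 3.0}
    for beat in (64, 66, 68, 70, 72):
        print(beat, blend_scenes(outgoing, incoming, transition_progress(beat, 64, 8)))
```

Easing the blend avoids an abrupt jolt at the start and end of the fade, and tying progress to beats means a tempo change stretches or shortens the transition along with the music.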

A big shift seems to be on the horizon: soon, crowds won’t just watch. They’ll help create, whether by waving, tapping, or sharing biofeedback such as heart rate.

One thing’s crystal clear: what happens on stage now depends as much on audience energy and the desire for connection as on the technology running the show. And the push toward interactive visuals shows no sign of slowing down.